Automata theory studies abstract machines and the problems they can solve, which gives it practical applications across many domains. The most important ones are listed below:

Computer Science and Programming Languages:

Lexical Analysis: Automata play a crucial role in compiler design, particularly in lexical analysis. A lexer recognizes tokens in source code by running finite automata built from the regular expressions that define each token class.
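As a minimal sketch of how a lexer can be driven by a finite automaton, the example below hand-codes a small DFA for two token classes. The token names (`IDENT`, `INT`), the character classes, and the helper names are assumptions made for illustration; a production lexer would normally generate its tables from regular expressions rather than write them by hand.

```python
# Hypothetical DFA for two token classes: identifiers and integer literals.
def char_class(ch):
    """Map a character to the input class used by the transition table."""
    if ch.isalpha() or ch == "_":
        return "letter"
    if ch.isdigit():
        return "digit"
    return "other"

# Transition table: (state, character class) -> next state.
TRANSITIONS = {
    ("start", "letter"): "ident",
    ("start", "digit"): "int",
    ("ident", "letter"): "ident",
    ("ident", "digit"): "ident",
    ("int", "digit"): "int",
}

# Accepting states and the token type each one produces.
ACCEPTING = {"ident": "IDENT", "int": "INT"}

def tokenize(text):
    tokens = []
    i = 0
    while i < len(text):
        if text[i].isspace():
            i += 1
            continue
        state, j = "start", i
        # Longest match: keep consuming while the DFA has a transition.
        while j < len(text) and (state, char_class(text[j])) in TRANSITIONS:
            state = TRANSITIONS[(state, char_class(text[j]))]
            j += 1
        if state not in ACCEPTING:
            raise ValueError(f"unexpected character {text[i]!r} at position {i}")
        tokens.append((ACCEPTING[state], text[i:j]))
        i = j
    return tokens

print(tokenize("x1 42 foo_bar"))
# [('IDENT', 'x1'), ('INT', '42'), ('IDENT', 'foo_bar')]
```

Keeping the transitions in a table rather than in nested conditionals mirrors the way lexer generators represent the automaton, and makes it easy to add token classes.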

Syntax Verification: Pushdown automata and context-free grammars underpin parsing. They describe the nested structure of a programming language and let a parser check that code follows the language's syntax.
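The stack is what separates a pushdown automaton from a finite automaton, and bracket matching is the smallest example that needs it. The sketch below is an illustrative recognizer for balanced brackets, not a full parser; the function name `is_balanced` is hypothetical.

```python
# Each closing bracket maps to the opener it must match.
PAIRS = {")": "(", "]": "[", "}": "{"}
OPENERS = set(PAIRS.values())

def is_balanced(source):
    """Accept the input if every bracket is closed in the right order."""
    stack = []
    for ch in source:
        if ch in OPENERS:
            stack.append(ch)          # push on an opening bracket
        elif ch in PAIRS:
            # pop on a closing bracket; reject on mismatch or empty stack
            if not stack or stack.pop() != PAIRS[ch]:
                return False
        # all other characters are ignored in this sketch
    return not stack                  # accept only if nothing is left open

print(is_balanced("f(a[0], {b})"))  # True
print(is_balanced("(]"))            # False
```

A finite automaton cannot do this for arbitrarily deep nesting because it has no way to remember an unbounded number of open brackets.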

Natural Language Processing (NLP):

Text Parsing: Finite state machines and probabilistic automata are used to analyze human language in many natural language processing (NLP) tasks, including named entity recognition and part-of-speech tagging.

Speech Recognition: Speech recognition systems frequently use Hidden Markov Models (HMMs), a kind of probabilistic automaton, to model sequences of sounds.
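To make the HMM idea concrete, here is a small sketch of Viterbi decoding, the standard way to recover the most likely hidden state sequence from observations. The two states (`silence`, `speech`), the two observation symbols (`low`, `high` signal energy), and every probability are invented for illustration; real recognizers use far larger models and work with log probabilities to avoid underflow.

```python
def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return the most likely state sequence for a non-empty observation list."""
    # best[t][s] = (probability of the best path ending in s at time t,
    #               predecessor of s on that path)
    best = [{s: (start_p[s] * emit_p[s][observations[0]], None) for s in states}]
    for obs in observations[1:]:
        column = {}
        for s in states:
            prob, prev = max((best[-1][p][0] * trans_p[p][s], p) for p in states)
            column[s] = (prob * emit_p[s][obs], prev)
        best.append(column)
    # Start from the best final state and follow predecessors backwards.
    state = max(states, key=lambda s: best[-1][s][0])
    path = [state]
    for column in reversed(best[1:]):
        state = column[state][1]
        path.append(state)
    return path[::-1]

# Toy model; all numbers below are assumptions, not measured values.
STATES = ["silence", "speech"]
START = {"silence": 0.8, "speech": 0.2}
TRANS = {
    "silence": {"silence": 0.7, "speech": 0.3},
    "speech": {"silence": 0.2, "speech": 0.8},
}
EMIT = {
    "silence": {"low": 0.9, "high": 0.1},
    "speech": {"low": 0.3, "high": 0.7},
}

print(viterbi(["low", "high", "high"], STATES, START, TRANS, EMIT))
# ['silence', 'speech', 'speech']
```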

Network Protocols:

Protocol Verification: Automata theory makes it possible to model communication systems and network protocols as state machines and to verify that they behave as intended under every modeled condition.
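A rough sketch of what this looks like in practice: the protocol becomes a transition table, and simple properties are checked by exploring that table. The four-state connection protocol below is hypothetical and heavily simplified (it is not real TCP), and the two checks shown, reachability and absence of dead ends, are only the most basic kind of verification.

```python
from collections import deque

# Hypothetical, heavily simplified connection protocol (not real TCP).
# state -> {event: next state}
TRANSITIONS = {
    "CLOSED": {"open": "SYN_SENT"},
    "SYN_SENT": {"syn_ack": "ESTABLISHED", "timeout": "CLOSED"},
    "ESTABLISHED": {"close": "FIN_WAIT"},
    "FIN_WAIT": {"ack": "CLOSED"},
}

def run(events, state="CLOSED"):
    """Replay a sequence of events, rejecting any the protocol does not allow."""
    for event in events:
        if event not in TRANSITIONS[state]:
            raise ValueError(f"event {event!r} is not allowed in state {state}")
        state = TRANSITIONS[state][event]
    return state

def reachable(start="CLOSED"):
    """Breadth-first search over the transition graph."""
    seen, queue = {start}, deque([start])
    while queue:
        for nxt in TRANSITIONS[queue.popleft()].values():
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen

# Two simple checks: no state is unreachable, and no reachable state is a dead end.
print(reachable() == set(TRANSITIONS))             # True
print(all(TRANSITIONS[s] for s in reachable()))    # True
print(run(["open", "syn_ack", "close", "ack"]))    # CLOSED
```

Model checkers apply the same idea at scale, exploring the combined state space of several communicating machines.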

Traffic Analysis: Finite state machines can be used to model and analyze network traffic patterns, which aids performance monitoring and security.

Control Systems:

Automata are used in the design of control and embedded systems, including those found in robotics and automation. Finite state machines model a system's states and transitions and determine how it responds to each input.

Game Development:

Behavior Modeling: Character and game element behavior is created and managed using automata models, such as finite state machines, to enable more dynamic and responsive interactions.

Bioinformatics:

Sequence Analysis: In bioinformatics, automata are utilized for sequence alignment and analysis, including pattern recognition in protein structures or DNA sequences.

Hardware Design:

Digital Circuit Design: To ensure that digital circuits and controllers function properly under a variety of circumstances, finite state machines are utilized in their design and implementation.
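A classic exercise in digital design is the sequence detector, usually specified as a Mealy machine before being turned into flip-flops and gates. The sketch below simulates one in software; the target pattern `1011`, the state names, and the choice to allow overlapping matches are assumptions made for illustration.

```python
# Mealy machine that outputs 1 whenever the last four input bits were 1011.
# State Sk means "the last k bits match the first k bits of the pattern".
# (state, input bit) -> (next state, output bit)
MEALY = {
    ("S0", 0): ("S0", 0), ("S0", 1): ("S1", 0),
    ("S1", 0): ("S2", 0), ("S1", 1): ("S1", 0),
    ("S2", 0): ("S0", 0), ("S2", 1): ("S3", 0),
    # On a match, fall back to S1 so the final 1 can start the next match.
    ("S3", 0): ("S2", 0), ("S3", 1): ("S1", 1),
}

def detect(bits):
    """Feed bits through the machine and collect one output bit per input bit."""
    state, outputs = "S0", []
    for bit in bits:
        state, out = MEALY[(state, bit)]
        outputs.append(out)
    return outputs

print(detect([1, 0, 1, 1, 0, 1, 1]))
# [0, 0, 0, 1, 0, 0, 1]
```

In hardware, the four states would typically be encoded in two flip-flops, with the table above implemented as combinational logic.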

Robotics and Automation:

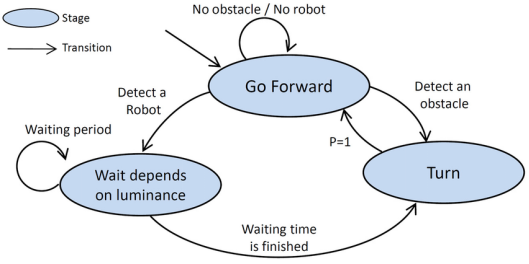

Path Planning: Finite state machines and other automata are used in robotics for path planning and obstacle avoidance. They help robots navigate complex environments by defining states for different stages of movement and decision-making.

Artificial Intelligence:

Behavior Trees: In AI, particularly in game AI, behavior trees use principles from automata theory to manage complex behaviors and decision-making processes in a structured way.

Data Compression:

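Algorithm Design: Automata appear in data compression algorithms. For example, the Lempel-Ziv-Welch (LZW) algorithm, used in file formats such as GIF, builds a dictionary of phrases as it reads the input. That dictionary can be viewed as a trie, a tree-shaped automaton that the encoder walks one symbol at a time and extends whenever it reaches a phrase it has not seen before.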
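The following is a minimal sketch of LZW on text, assuming every input character has a code point below 256. It keeps the dictionary as a plain Python `dict` keyed by phrase rather than as an explicit trie, and it emits integer codes without packing them into bits, so it shows the algorithm rather than any real file format.

```python
def lzw_compress(data):
    """Encode a string as a list of integer codes (assumes code points < 256)."""
    dictionary = {chr(i): i for i in range(256)}
    phrase, codes = "", []
    for ch in data:
        if phrase + ch in dictionary:
            phrase += ch                      # keep extending the current phrase
        else:
            codes.append(dictionary[phrase])  # emit the longest known phrase
            dictionary[phrase + ch] = len(dictionary)
            phrase = ch
    if phrase:
        codes.append(dictionary[phrase])
    return codes

def lzw_decompress(codes):
    """Rebuild the string, reconstructing the same dictionary on the fly."""
    if not codes:
        return ""
    dictionary = {i: chr(i) for i in range(256)}
    previous = dictionary[codes[0]]
    out = [previous]
    for code in codes[1:]:
        if code in dictionary:
            entry = dictionary[code]
        elif code == len(dictionary):
            # The code refers to the phrase that is being defined right now.
            entry = previous + previous[0]
        else:
            raise ValueError(f"invalid code {code}")
        out.append(entry)
        dictionary[len(dictionary)] = previous + entry[0]
        previous = entry
    return "".join(out)

codes = lzw_compress("ABABABA")
print(codes)                  # [65, 66, 256, 258]
print(lzw_decompress(codes))  # ABABABA
```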

Text Search Algorithms:

Pattern Matching: Automata are fundamental to efficient text search algorithms. For instance, the Aho-Corasick algorithm builds a finite state machine from a set of patterns and searches for all of them in a single pass over the text. After preprocessing, the search takes time linear in the length of the text plus the number of matches, which suits applications such as scanning large databases.
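A compact sketch of Aho-Corasick is shown below, assuming all patterns are non-empty strings. The automaton is stored as three parallel lists (`goto`, `fail`, `output`); these names and the `(start index, pattern)` result format are choices made for this example, not part of any particular library.

```python
from collections import deque

def build_automaton(patterns):
    """Build the trie, failure links, and output sets for non-empty patterns."""
    goto, fail, output = [{}], [0], [[]]
    for pattern in patterns:
        state = 0
        for ch in pattern:
            if ch not in goto[state]:
                goto.append({})
                fail.append(0)
                output.append([])
                goto[state][ch] = len(goto) - 1
            state = goto[state][ch]
        output[state].append(pattern)
    # Breadth-first pass: a node's failure link points to the longest proper
    # suffix of its path that is also a path from the root.
    queue = deque(goto[0].values())
    while queue:
        state = queue.popleft()
        for ch, nxt in goto[state].items():
            queue.append(nxt)
            f = fail[state]
            while f and ch not in goto[f]:
                f = fail[f]
            fail[nxt] = goto[f].get(ch, 0)
            # Inherit patterns that end at the failure target.
            output[nxt] = output[nxt] + output[fail[nxt]]
    return goto, fail, output

def search(text, patterns):
    """Return (start index, pattern) for every occurrence, in one pass."""
    goto, fail, output = build_automaton(patterns)
    state, matches = 0, []
    for i, ch in enumerate(text):
        while state and ch not in goto[state]:
            state = fail[state]
        state = goto[state].get(ch, 0)
        for pattern in output[state]:
            matches.append((i - len(pattern) + 1, pattern))
    return matches

print(search("ushers", ["he", "she", "his", "hers"]))
# [(1, 'she'), (2, 'he'), (2, 'hers')]
```

The failure links are what let the search continue without ever moving backwards in the text, even when several patterns overlap.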

Cryptography:

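Random Number Generation: Some cryptographic systems use automata to generate pseudorandom sequences. Linear feedback shift registers (LFSRs), a type of finite state machine, are a common building block in stream ciphers and hardware random number generators. Because an LFSR is linear, its output alone is predictable from a short sample, so cryptographic designs combine LFSRs with nonlinear components rather than using them directly for key generation.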
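The sketch below simulates a Fibonacci-style LFSR as a state machine. The 4-bit width, the tap positions, and the function names are assumptions chosen so the full cycle is easy to inspect; this is an illustration of the mechanism and is not suitable for generating keys.

```python
def lfsr_step(state, taps, width):
    """Advance the register by one step.

    `taps` lists the bit positions (0 = least significant) that are XORed
    together to form the new top bit. Position 0 should be included so that
    every state lies on a cycle.
    """
    bit = 0
    for tap in taps:
        bit ^= (state >> tap) & 1
    return (state >> 1) | (bit << (width - 1))

def lfsr_period(seed, taps, width):
    """Count the steps until the register returns to its seed."""
    state, steps = lfsr_step(seed, taps, width), 1
    while state != seed:
        state = lfsr_step(state, taps, width)
        steps += 1
    return steps

# A 4-bit register with taps at bits 0 and 1 visits all 15 non-zero states.
print(lfsr_period(0b0001, taps=(0, 1), width=4))  # 15

# The output stream is the low bit of each successive state.
state, stream = 0b0001, []
for _ in range(8):
    stream.append(state & 1)
    state = lfsr_step(state, (0, 1), 4)
print(stream)  # [1, 0, 0, 0, 1, 0, 0, 1]
```

The all-zero state maps to itself, which is why an LFSR must never be seeded with zero.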